Great news, the Parallella is now a real computer!! The gigabit ethernet port is working and the full Ubuntu desktop version is up and running! We had some scary moments this week, but in the end everything worked out. Sometimes it’s really worth considering the old advice “if it ain’t broke, don’t fix it”. Amazing how the most innocent of design changes can cause such major headaches at times…

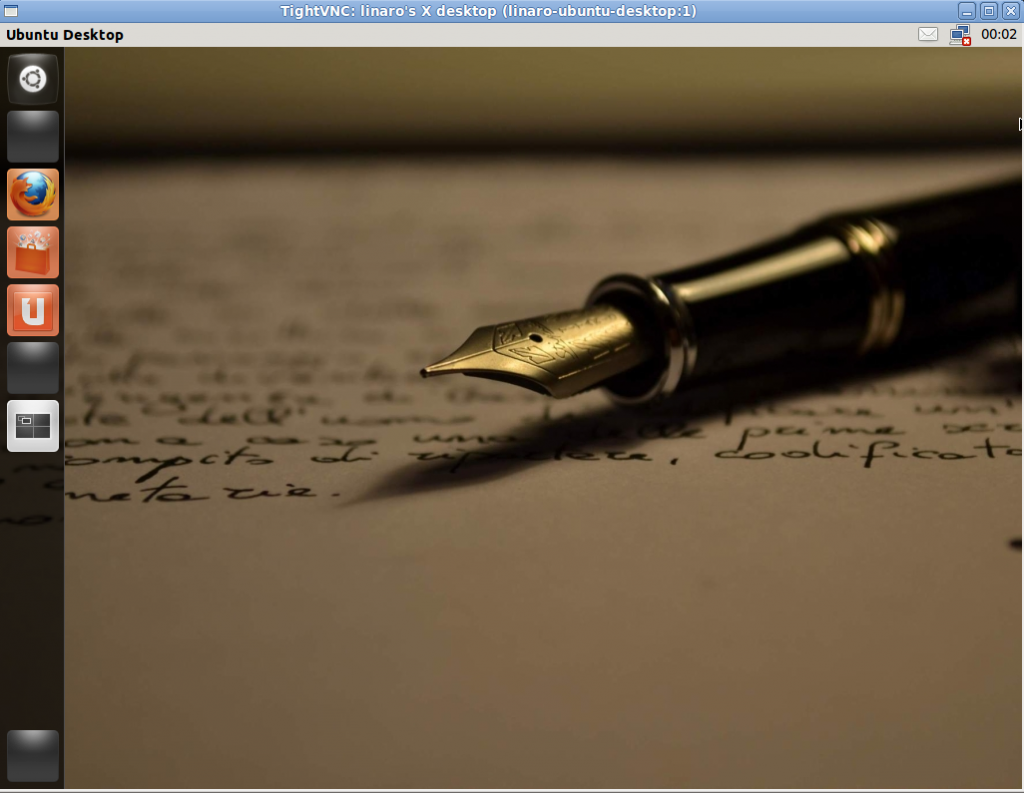

The picture below shows the Ubuntu desktop as seen within the TightVNC client running on a PC. At this point, the Parallella can be used as a “headless” low power server. The next critical step will be to ramp up HDMI and USB functionality so that the Parallella can be used as a standalone computer.

The next two weeks will be filled with additional board bring-up work and extensive Parallella validation and testing. We need to make sure any and all major issues are wrung out before we send out the first 100 prototypes to Kickstarter backers who signed up for Parallella early access. At this point we are aiming to ship these early boards by the second week of June.

We will be posting some SIGNIFICANT Parallella-related news over the next few weeks, so don’t go far! The best way to stay up to date on Parallella news is to follow us on Twitter (@adapteva and @parallellaboard).

Fantastic! It seems to be going unbelievably smoothly so far.

CONGRATULATIONS ADAPTEVA!!!

I do not quite understand. The board you are working on is, in theory, the same as your prototype that worked. Why is it so hard to get it to work?

“In theory, there is no difference between theory and practice. But in practice, there is.”

I wonder how these Parallella boards would perform with renderers, because renderer performance scales well with more processors. Blender renderers, for example, have support for many cores.

Its old renderer supports up to 64 threads and the new Cycles renderer may support even more, so I wonder what the rendering performance would be in Blender with these little devils compared to, let’s say, other processors such as those from Intel and AMD.

Will these boards be good enough for such a task? I was thinking along the lines of creating relatively small, affordable rendering farms with them. So let’s say that with such prices one buys about 4 of the 64-core boards; one could load Blender on each of them and set it for 64 threads. The cost of this would be several hundred dollars. I wonder how that would compare with the performance of a PC that costs about the same.

Let’s see if soon anybody runs a test along this line to see how that goes.

It’s an illusion..we filter out the bumps.:-)

Nice:-).

As it is, in theory this board actually ended up being more complex than we had anticipated. Some things that had to be changed from the zedboard based prototype included: ethernet phy, usb phy, pmic, clock drivers, hdmi phy, and SDRAM.

Not terribly complicated, but enough changes to make for an interesting bring up journey. There have been 8-10 issues so far that had to be found, debugged, and worked around. I think getting to this point after 3-4 weeks of work is pretty good, but obviously if there are no issues and there is extensive pre-arrival preparation, it would be possible to bring up the board much faster.

@dade916 took some interest in the Epiphany for LuxRender (http://www.luxrender.net/en_GB/index), so I assume that raytracing might be a decent fit for Epiphany on paper. There will undoubtedly be porting challenges, since most CPU-centric code is too big for Epiphany and most GPU OpenCL code makes extensive use of global memory (which is a bad thing for Epiphany).

This is very good to hear. I plan on using it as a small Ubuntu “server” over ssh most of the time. Looks like that is already working.

+1 for Dade and Epiphany

http://www.luxrender.net/forum/viewtopic.php?f=17&t=9031&p=87762#p95331

Same here, Rahul. On the other hand, I would like to use the HDMI connection to use it as a Full HD media player too!

Congrats Andreas, these things are often made to look easy but aren’t. So enjoy the moment (I am saying that probably because I’ve been there).

BIG data sets (regarding raytracing) are an issue that has been there a VERY long time. I became familiar with the issue when I was looking at solutions to the performance challenges of raytracing back in the sub-gigahertz processor era. A lot of people ‘forgot’ this issue as huge performance and memory increases of superscalar machines made such issues “go away”. However, that made a lot of people ‘fat, dumb and happy’ about software (i.e. lazy, sloppy and negligent with their development). This is an old problem given new focus.

The solution has always been ‘increase the clock and memory’. That can’t always happen. Ray tracing, although considered an embarrassingly parallel problem, turns out to be a serious BIG data problem, AND a serious stream processing one as well. It’s not a classic example for just one type of problem (that’s what I found out at least). It has a mix of image processing parallelism and stream processing (what do you do with the data after you make it, just a hint).

If one considers that a bitmap must be converted from a compressed JPEG (DCT-compressed YUV data) to RGB to be used in a texture, the amount of data (LITERALLY) explodes. As a simplistic example, a typical 1024×1024 JPEG texture actually becomes an array of double precision RGB tuples. So that innocent 100k file becomes 24 megabytes of raw data as 1 texture (say that is just the pupil in the human head you are using, amongst about 30 or 40 other textures in the scene).

Then look at a typical mesh of, say, 32 x 32 points that has UV coordinates and surface normals (and uses said texture). That ends up being XYZ tuples, UV tuples, and 3D vector tuples (8 qwords per point, in other words), so 64 bytes per point and 64K for a SMALL mesh. (Your typical model these days has as many as 32k vertices when reduced, so we are talking a minimum of 2 megs in just a single model’s vertices alone.)

It can get quite unwieldy quickly.
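For anyone who wants to check the arithmetic above, here is a minimal Python sketch. It assumes every component (colour channel, coordinate, normal component) is stored as an 8-byte double, as described in the comment; the function names `texture_bytes` and `mesh_bytes` are made up for illustration and are not part of any renderer or of the Parallella SDK.

```python
# Rough memory footprint of uncompressed scene data.
# Assumption: every component is stored as an 8-byte double precision value.
BYTES_PER_DOUBLE = 8


def texture_bytes(width, height, channels=3):
    """Bytes for a decoded texture stored as double precision RGB tuples."""
    return width * height * channels * BYTES_PER_DOUBLE


def mesh_bytes(vertex_count, doubles_per_vertex=8):
    """Bytes for mesh vertices: XYZ (3) + UV (2) + normal (3) = 8 doubles each."""
    return vertex_count * doubles_per_vertex * BYTES_PER_DOUBLE


MIB = 1024 * 1024
KIB = 1024

texture = texture_bytes(1024, 1024)   # the 1024x1024 JPEG once decoded
small_mesh = mesh_bytes(32 * 32)      # the "small" 32 x 32 point mesh
model = mesh_bytes(32 * 1024)         # a model with 32k vertices

print(f"texture:    {texture / MIB:.0f} MiB")
print(f"small mesh: {small_mesh / KIB:.0f} KiB")
print(f"32k model:  {model / MIB:.0f} MiB")
```

Multiplying the texture figure by the 30 or 40 textures mentioned for a single scene gives a feel for how quickly the working set outgrows small local memories.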

Anyhow it’s great you have it doing something more at least. A raytracing OpenCL solution might take a bit more time however (just a suspicion).

What’s the new hdmi phy part # ?

ADV7513BSWZ

and so on… I am wondering if this kind of SoC could be stackable, for example in a supercomputer like this one

http://www.southampton.ac.uk/mediacentre/features/raspberry_pi_supercomputer.shtml

🙂

I simply cannot wait to get my hands on one of these boards and start hacking.

Hi,

Great job guys, I am very excited to see this project. Can someone post a video of Ubuntu starting up? What is the maximum HDMI output resolution?

Thanks! We want the first live video to be somewhat impressive (not just booting) so we are holding off a little longer. HDMI is not up and running yet, but we are working on it.

As someone who has been working on both cluster-CPU and GPU Monte Carlo simulation codes for radiation transport for a while, I am eagerly looking forward to implementing them on Parallella. This seems like the perfect tech for revolutionizing medical dosimetry and CT/x-ray imaging.

…More than that, I am super excited for the possibility of running simulations on a cluster of machines that fits inside a cereal box and draws less electricity than an incandescent lightbulb — sure beats a couple racks and a couple kilowatts.

Best of luck! When everything works out, you guys are getting a shout-out in my thesis!

You guys are about to change the world. I can’t wait.

Thanks for your reply !

It would be better if you guys ran rock-solid Debian: strong hardware deserves the best distro…

Another good occasion lost!

My guess would be that it’s not a huge deal to move to another distribution that leverages the same version of the Linux kernel. Is this a correct assumption? Thanks.

Based on what we have seen (and from what we have heard) it shouldn’t be a huge deal for someone familiar with the distro in question. The hardest part will be to pick one without upsetting all the others. We won’t be able to support very many Linux distros by ourselves.

My interest here is in the lowest-level drivers and bare metal programming. My idea is to model the hardware using “Soft Circuit Description Language” and then synthesize runtime code from these graph-based models and build up on top of that. http://encapsule.org explains the broad vision. But I didn’t learn about this until after the Kickstarter campaign ended, so I expect it will be a while before I get my hands on a board (I don’t mind rework wires BTW 😉). Cheers – happy and productive hacking to you all.

We don’t need to upset any distro actually. IMO any distro would suffice if it fully supports Parallella’s SDK, i.e. we can get the full juice out of those sweet Epiphany cores 🙂

Many Congratulations to the Team at Adapteva!!!

Keep em coming :p

I am very impressed with such a short board bringup cycle. I think you are inspiring me to try to tackle the fq_codel bufferbloat fix-ethernet-in-hardware problem on the zynq-7000. (I know that’s different from your target market, but have you considered building a board that had multiple 10GigE ports? Want to stick more intelligence in the network stack…)

I hope Debian on Parallella will be ready ASAP. Ubuntu is too much “vanishing”, too much “rolling 3D cubes”, too much “wannabe”.

Parallella deserves a stable-over-time, rock-solid distro for those who know how to work. Give us a Debian on Parallella, no matter if headless and controlled only by ssh: we need the muscles of 16 cores, just that. Unity, a bleeding-edge office suite or other “desktop” stuff are completely useless to us. (IMHO)

Please do not write “us” if you mean “me”. These letters are not even close to each other on your keyboard. The big issue with Debian is the way it handles codecs. Or rather the way it doesn’t support them.

Hi! I read about Parallella at this right moment. Congrats for the project, I agree with Ray Renteria, you guys will change the world!

I understand that with Parallella we’ll be able to use the web and work with open source OSs, like Ubuntu; but I’m not a “hardware guy” and I don’t understand things like:

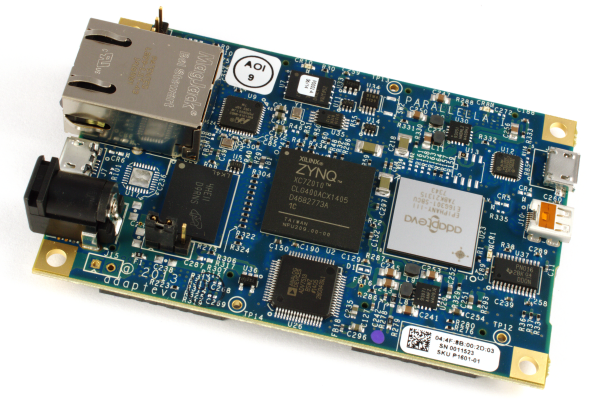

– The image in this post shows a credit-card-size board, but in the Kickstarter video it was a bigger thing. I’m confused.

– Is it possible to install other OSs? If yes, how? Only by USB?

– Is it possible to play games with Parallella (even the new ones)? Is the performance comparable to using GPUs?

Regards!

I really want to buy one!!

Are you still on track for June shipping?

Hello Andreas Olofsson:

I just found out about your super project. I’ve been waiting 30 years for a truly affordable massively parallel general CPU architecture. I have a software architecture concept that has been thrashing around in my head all that time. The concept is exceptional in that it does not try to divide current programming schemes into tiny portions to be massively farmed out in parallel. Instead it includes a new form of requirements engineering and system design paradigms to describe standard business applications in a way that can be massively processed.

The real question I have here is where I can find the specs on your chips. I would be interested in things like the amount of local memory per chip, and the communication / scheduling abilities for individual CPUs.

Jerry.

Hi Jerry,

Please see the Architecture Reference and 16-core chip datasheet:

http://www.adapteva.com/wp-content/uploads/2012/12/epiphany_arch_reference_3.12.12.18.pdf

http://www.adapteva.com/wp-content/uploads/2013/02/e16g301_datasheet_3.13.2.13.pdf

Regards,

Andrew

@tarciozemel,

The board shown in the video was simply a prototype, and the one pictured at the top of this post is the final form factor.

The operating system lives on a microSD card which you can prepare using another computer. Linux is the officially supported O/S but it should be possible to port NetBSD, FreeBSD and other operating systems.

You will be able to play games on Parallella, but I’m not a gamer and couldn’t comment on which and performance etc. However, I would say that if you are thinking along the lines of a game using the many-core accelerator, it would need to be developed/modified to use this.

Regards,

Andrew

Would Parallella be able to run a hypervisor or virtual machine monitor (VMM)?

A quick Google search suggests there is Xen for ARM/Zynq but you’d need to look into the status of support, and there are proprietary hypervisors for ARM also.

Wondering if Ubuntu is utilizing all cores. How high can you go with RAM?

When will it run Ubuntu Server? Will it be able to run it?